Disclaimer: The following is based on my personal opinion and interpretation; in no way does it represent the views of my employer.

Confused about the Customer Data Platform (CDP) space? Me too, and I live and breathe it every day as part of my job. The product marketing used by vendors, both old and new, doesn’t make it any easier for brands looking to buy a CDP. I’m writing this blog post to help demystify terms and cut through the product marketing jargon. If you’re brand new to the concept of a CDP, I wouldn’t start here. Instead, I suggest starting here.

I’m going to break specific terms down into three main sections:

- Composability

- Real-time

- Identity Resolution

Any updates or changes to this blog will be explicitly dated above.

Composability

It’s important to start with composability because it’s one of the newest trends among CDP vendors. My preferred way of explaining composability is “bring your own data infrastructure”. The bulk of a brand’s data infrastructure is typically a cloud data platform, which for these use cases usually means Snowflake, Google BigQuery, AWS Redshift, or Databricks.

Reverse-ETL

Reverse-ETL is simply taking data from a database, or data platform, and pushing it in batches to a destination, most commonly a SaaS platform. The name comes from standard ETL (Extract, Transform, Load), which commonly describes loading data from one database to another, or from a SaaS platform into a database. All CDPs have had a flavor of this, but the term was popularized once the database holding the customer data was a data platform owned by the brand rather than one owned by the CDP.
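To make the pattern concrete, here is a minimal Reverse-ETL sketch in Python. It makes a few assumptions. An in-memory SQLite database stands in for the brand’s data platform, and a plain list stands in for the SaaS destination. The table, the columns, and the function names `read_in_batches` and `reverse_etl_sync` are hypothetical. Real tools add scheduling, change detection, retries, and API rate limiting on top of this basic loop.

```python
import sqlite3
from typing import Callable, Iterator, List, Tuple

def read_in_batches(conn: sqlite3.Connection, query: str, batch_size: int) -> Iterator[List[Tuple]]:
    """Extract: stream query results out of the data platform in fixed-size batches."""
    cursor = conn.execute(query)
    while True:
        rows = cursor.fetchmany(batch_size)
        if not rows:
            break
        yield rows

def reverse_etl_sync(conn: sqlite3.Connection, query: str,
                     push: Callable[[List[dict]], None], batch_size: int = 2) -> int:
    """Transform each batch into the destination's payload shape, then Load it via `push`."""
    synced = 0
    for rows in read_in_batches(conn, query, batch_size):
        payload = [{"email": email, "lifetime_value": ltv} for email, ltv in rows]
        push(payload)  # hypothetical stand-in for a SaaS API call
        synced += len(payload)
    return synced

# Hypothetical warehouse table owned by the brand.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE customers (email TEXT, lifetime_value REAL)")
warehouse.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [("a@example.com", 120.0), ("b@example.com", 75.5), ("c@example.com", 310.0)],
)

sent_batches: List[List[dict]] = []  # stand-in for the SaaS destination
count = reverse_etl_sync(
    warehouse, "SELECT email, lifetime_value FROM customers ORDER BY email", sent_batches.append
)
print(count, len(sent_batches))  # 3 2
```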

Composable CDP

The term “Composable CDP” is largely attributed to the vendor Hightouch, which gained popularity as a Reverse-ETL tool. You can learn more about their view here.

A somewhat silly analogy that I’m currently working on: When you buy a more “Packaged” CDP, you’re paying for a movie theater experience for your whole family - it’s a great customer experience, but if you go to the movies a lot, it can get pricey, especially if you buy the additional popcorn / snacks that they sell at a premium price every time.

A Composable CDP is a home theater that you put together under your own roof - some providers will sell you the whole setup that you put in your own home, others will just sell you the TV, the surround sound system, or the couch you sit on. You could theoretically switch out individual components of the setup, and it still works. You could even take the entire setup to a new home.

Confusion has crept in here because some traditional movie theaters are now offering individual components of their setup for your home theater and claiming to be “composable” because of it. In many cases, they’ll still make you come to their theater for certain movies. You need to decide if you’re comfortable with that.

Zero-copy

Many CDPs, composable or otherwise, claim that they are “Zero-copy”, which can mean one of two things:

- The vendor is not persisting (i.e. keeping) the customer’s data in their infrastructure

- The customer doesn’t have to copy the data out of the vendor’s platform to query it – also known as data sharing.

Confusion sets in because, if you peel back the top layer, these two can work very differently under the hood.

The first approach connects directly into the customer’s data platform while the second uses “data sharing”. Additional confusion appears because not all data sharing works the same (see Zero-ETL below).

Finally, some vendors that are truly Zero-copy describe their solution as Zero-ETL.

At the end of the day, Zero-copy is all about restricting the movement of data from Point A to Point B. The goal is to enhance data collaboration and data security as customers are not forced to copy and move their data around.
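The following sketch contrasts copying data out with the first flavor of Zero-copy. It is a hypothetical illustration, not any vendor’s implementation. SQLite stands in for the customer’s data platform, and `build_audience_by_copy` and `build_audience_zero_copy` are made-up names. In the copy-based version, every row lands in the vendor’s store. In the zero-copy version, the audience logic is pushed down to the customer’s platform as SQL, and the vendor persists nothing.

```python
import sqlite3
from typing import List

def build_audience_by_copy(customer_db: sqlite3.Connection, vendor_store: List[tuple]) -> List[str]:
    # Copy-based: replicate the whole table into the vendor's infrastructure, then filter there.
    vendor_store.extend(customer_db.execute("SELECT email, country, orders FROM customers"))
    return sorted(email for email, country, orders in vendor_store if country == "US" and orders >= 2)

def build_audience_zero_copy(customer_db: sqlite3.Connection) -> List[str]:
    # Zero-copy: the query runs inside the customer's platform; the vendor persists no raw rows.
    rows = customer_db.execute(
        "SELECT email FROM customers WHERE country = ? AND orders >= ? ORDER BY email", ("US", 2)
    )
    return [email for (email,) in rows]

customer_db = sqlite3.connect(":memory:")
customer_db.execute("CREATE TABLE customers (email TEXT, country TEXT, orders INTEGER)")
customer_db.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    ("a@example.com", "US", 3), ("b@example.com", "CA", 5),
    ("c@example.com", "US", 1), ("d@example.com", "US", 2),
])

vendor_store: List[tuple] = []  # hypothetical vendor-side datastore
copied = build_audience_by_copy(customer_db, vendor_store)
in_place = build_audience_zero_copy(customer_db)
print(copied == in_place, len(vendor_store))  # True 4
```

Both functions produce the same audience. The difference is where the data ends up, which is the point of the Zero-copy claim.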

Shoutout to the Segment team for helping me personally define a clear delineation between Zero-copy and Zero-ETL.

Zero-ETL

Zero-ETL is different from Zero-copy, as there is data movement involved.

Zero-ETL is essentially “managed ETL”. The term was coined by AWS to describe moving data from AWS Aurora to AWS Redshift. Instead of a data engineer building a data pipeline as they normally would, AWS manages it all directly, provided you use both Aurora and Redshift.

The same applies to a CDP, or any other technology that uses Zero-ETL to operate on top of customer data. The vendor syncs all changes to the customer’s data (which lives within the customer’s data infrastructure) to the vendor’s infrastructure, and vice versa. This means the customer doesn’t have to create a data pipeline between the two infrastructures. Some vendors also use “secure data sharing” to achieve Zero-ETL, but at the end of the day, that data is still copied and persisted in another datastore.
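Here is a minimal sketch of what the vendor automates under Zero-ETL. It assumes that each record carries an `updated_at` value and that changes are detected with a simple high-water mark. Real services typically read the database’s change log instead. The `Store` class and `sync_changes` function are hypothetical. Note that the target ends up holding its own copy of the data, which is exactly what distinguishes this from Zero-copy.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class Store:
    # Hypothetical datastore: primary key -> record; every record carries an "updated_at" value.
    rows: Dict[str, dict] = field(default_factory=dict)

def sync_changes(source: Store, target: Store, watermark: int) -> int:
    """Copy records changed after `watermark` from source to target and return the new watermark."""
    new_watermark = watermark
    for key, record in source.rows.items():
        if record["updated_at"] > watermark:
            target.rows[key] = dict(record)  # the data is copied and persisted in the target
            new_watermark = max(new_watermark, record["updated_at"])
    return new_watermark

customer = Store({"u1": {"email": "luke@example.com", "state": "GA", "updated_at": 1},
                  "u2": {"email": "leia@example.com", "state": "NY", "updated_at": 2}})
vendor = Store()

wm = sync_changes(customer, vendor, watermark=0)      # initial load copies both records
customer.rows["u1"].update(state="NJ", updated_at=5)  # a change lands in the customer's platform
wm = sync_changes(customer, vendor, watermark=wm)     # only the changed record is copied again
print(wm, vendor.rows["u1"]["state"])  # 5 NJ
```

The “vice versa” direction would be the same call with the arguments swapped, using its own watermark.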

Real-time

Everyone wants everything in Real-time™, and many CDPs brand themselves as a “Real-time Customer Data Platform”. It naturally sounds very cool and promising, especially to folks who don’t understand the technical details and still live in FTP hell.

The problem is that no one can agree on a definition of what “real-time” means. I have heard everything from milliseconds, to seconds, to minutes, and yes, even hours.

My opinion is that real-time should at the very least mean single-digit seconds, and in some specific use cases, even faster, with sub-second responses. Anything measured in minutes or more should qualify as “near real-time”.

Finally, the CDP might have the real-time capabilities described below while the downstream platform does not, so it’s important to always check destination limitations.

Event Data Collection (i.e. real-time ingestion)

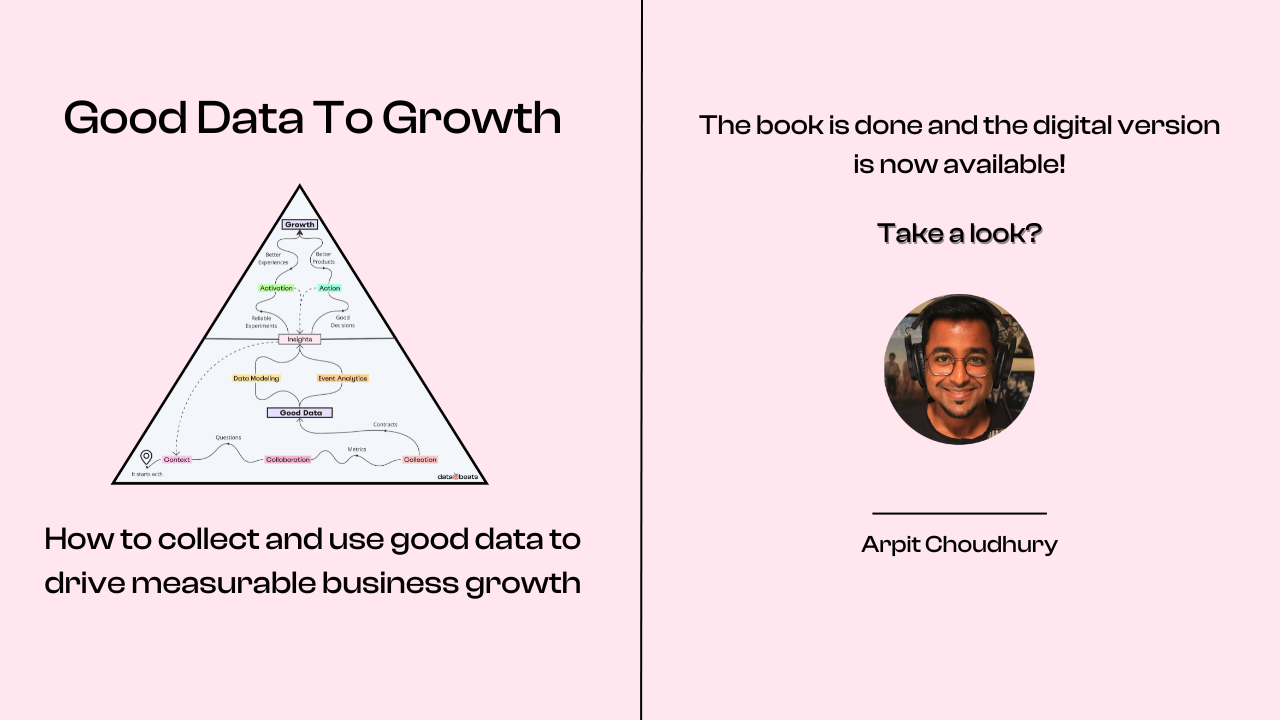

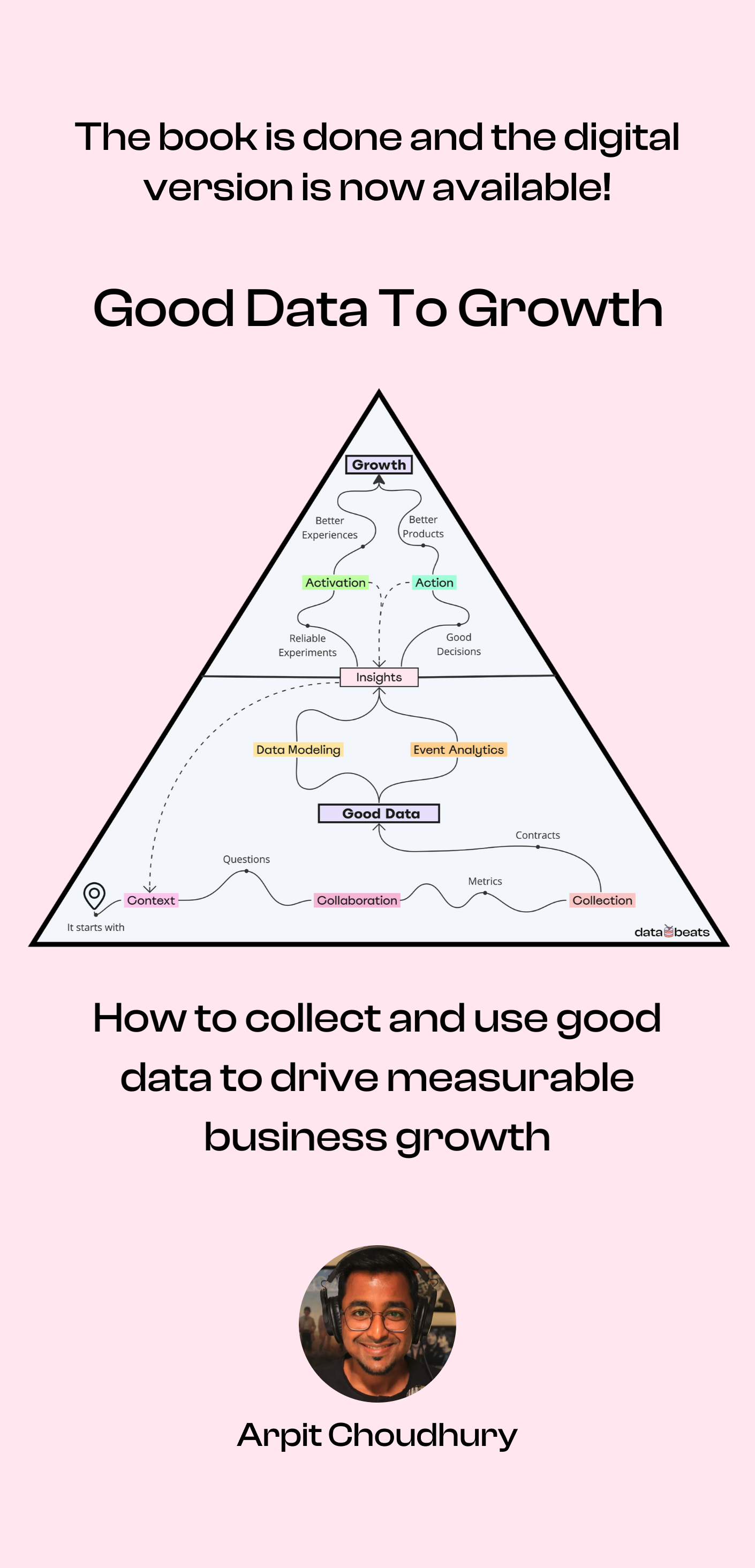

Event collection commonly refers to behavioral data collection – or tracking what a customer is doing on a brand’s website, mobile app, or other applications such as a gaming console or an OTT device. If you’d like to learn more about event data collection, this guide from Arpit is all you need.

Capturing behavioral data signals with a packaged solution is almost always real-time, but what happens next depends on where the data goes after an event is captured. It typically goes to one of these three destinations:

- The vendor’s datastore(s)

- The customer’s datastore

- Direct to the destination (see Server to Server Event Routing below)

Today, the first two are typically “near real-time” (minutes), though there are some instances of “true real-time”. The third is supposed to be true real-time.
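The gap between these paths often comes down to buffering. The hypothetical `Collector` below forwards each event to a direct destination immediately. Events bound for a datastore instead wait in a buffer until the next flush. The payload fields and the 60-second flush interval are illustrative assumptions. Timestamps are passed in explicitly so the behavior is deterministic.

```python
from typing import Callable, List

class Collector:
    def __init__(self, flush_interval_s: float, direct: Callable[[dict], None]):
        self.flush_interval_s = flush_interval_s
        self.direct = direct             # direct path: server-to-server destination
        self.buffer: List[dict] = []     # datastore paths: waiting for the next load
        self.stored: List[dict] = []     # stand-in for the vendor's or customer's datastore
        self.last_flush = 0.0

    def track(self, user_id: str, event: str, properties: dict, now: float) -> None:
        payload = {"user_id": user_id, "event": event, "properties": properties, "timestamp": now}
        self.direct(payload)             # forwarded as soon as it is captured
        self.buffer.append(payload)      # only becomes queryable after a flush
        if now - self.last_flush >= self.flush_interval_s:
            self.flush(now)

    def flush(self, now: float) -> None:
        self.stored.extend(self.buffer)
        self.buffer.clear()
        self.last_flush = now

sent: List[dict] = []
collector = Collector(flush_interval_s=60, direct=sent.append)
collector.track("u1", "Product Viewed", {"sku": "123"}, now=10)
print(len(sent), len(collector.stored))  # 1 0  (routed, but not yet stored)
collector.track("u1", "Order Completed", {"total": 42.0}, now=70)
print(len(sent), len(collector.stored))  # 2 2
```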

Profile API (i.e. real-time serving)

This capability has many different names – I’ve seen Personalization API, Audience API, Activation API, etc. At its core, the use case is to do a real-time lookup on a user profile, and personalize their experience on the web, inside an app, or in a message (such as an email).

Building the profiles behind this lookup is not real-time; it is typically a batch process. Serving the profile itself, however, happens in real-time.

This is where a “sub-second” response is crucial; otherwise, you’re looking at slow page loads or huge bottlenecks in message creation and delivery.
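The following sketch shows the split between batch building and real-time serving. `build_profiles` is the scheduled batch job, and `ProfileAPI.get` is the request-time lookup. Both names, the event shape, and the profile fields are hypothetical. An in-memory dict stands in for the low-latency key-value store a production system would use.

```python
from collections import defaultdict
from typing import Dict, List, Optional

def build_profiles(events: List[dict]) -> Dict[str, dict]:
    """Batch step (e.g. run on a schedule): fold raw events into one profile per user."""
    profiles: Dict[str, dict] = defaultdict(lambda: {"orders": 0, "last_category": None})
    for e in sorted(events, key=lambda ev: ev["ts"]):
        profile = profiles[e["user_id"]]
        if e["event"] == "Order Completed":
            profile["orders"] += 1
        if "category" in e:
            profile["last_category"] = e["category"]
    return dict(profiles)

class ProfileAPI:
    """Serving step: answer lookups from precomputed profiles without recomputing anything."""
    def __init__(self, profiles: Dict[str, dict]):
        self._profiles = profiles

    def get(self, user_id: str) -> Optional[dict]:
        return self._profiles.get(user_id)  # constant-time dict lookup

events = [
    {"user_id": "u1", "event": "Product Viewed", "category": "shoes", "ts": 1},
    {"user_id": "u1", "event": "Order Completed", "category": "shoes", "ts": 2},
    {"user_id": "u2", "event": "Product Viewed", "category": "hats", "ts": 3},
]
api = ProfileAPI(build_profiles(events))
profile = api.get("u1")
banner = f"More {profile['last_category']} for you" if profile else "Welcome!"
print(banner)  # More shoes for you
```

The expensive aggregation happens ahead of time, so the request path is only a lookup. That is what makes sub-second serving feasible, and it is also where the scalability costs discussed next come from.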

Data platforms like Snowflake or Databricks don’t have this capability yet, but they intend to change that. Most CDPs, on the other hand, have this capability baked into their solution, but scalability is a common issue here. Some customers also choose to build and manage an API themselves to power use cases that rely on real-time serving.

Composable solutions typically sell this as an add-on, but the same scalability challenge applies there as well. Whether it’s packaged or composable, any solution you buy off the shelf can get pricey at scale, especially if the brand has millions of customers.

Server to Server (S2S) Event Routing

When CDPs talk about being real-time, they mostly refer to their S2S event routing capability – in fact, this is where the CDP category effectively started.

Event routing takes place when a user action (or event) is instantly routed to an end destination in order to trigger an automated workflow. It can be as simple as a profile update in a customer engagement platform (such as an email marketing tool), or something more critical, such as removing a user who has made a purchase from a paid media destination.

S2S event routing is almost always real-time. Event routing can also be “batched”, but that is effectively the same as Reverse ETL, since those events are batched into and out of a database. Real-time event routing to a SaaS platform skips the database; however, it might use the Profile API to enrich the data before it is routed to its final destination.
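Here is a minimal sketch of S2S routing with optional enrichment. It assumes that routes are configured per event name and that a profile lookup function (such as the Profile API described above) may be supplied. The handler names and destinations are hypothetical stand-ins. Real routers also handle retries, rate limits, and per-destination payload mapping.

```python
from typing import Callable, Dict, List, Optional

Handler = Callable[[dict], None]
Lookup = Callable[[str], Optional[dict]]

def route_event(event: dict, routes: Dict[str, List[Handler]], lookup: Optional[Lookup] = None) -> int:
    """Deliver one event to every destination configured for its name, with no database in between."""
    enriched = dict(event)
    if lookup is not None:
        profile = lookup(event["user_id"])
        if profile:
            enriched["profile"] = profile  # enrichment step before delivery
    handlers = routes.get(event["event"], [])
    for deliver in handlers:
        deliver(enriched)
    return len(handlers)

email_updates: List[dict] = []   # stand-in for a customer engagement platform
suppressed: List[str] = []       # stand-in for a paid media suppression list

routes = {
    "Order Completed": [
        email_updates.append,
        lambda e: suppressed.append(e["user_id"]),  # stop showing acquisition ads to buyers
    ],
}
profiles = {"u1": {"tier": "gold"}}  # hypothetical precomputed profiles
delivered = route_event({"user_id": "u1", "event": "Order Completed"}, routes, profiles.get)
print(delivered, suppressed, email_updates[0]["profile"])  # 2 ['u1'] {'tier': 'gold'}
```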

Streaming Reverse ETL

Update: 10/20/2023

The Census approach mentioned below is actually split between S2S and streaming Reverse ETL.

Census specifically can take in a Kafka or Materialize stream, in which case their solution effectively works as S2S routing: the only delay comes from the time spent enriching or transforming that data, and overall activation should happen in seconds. More delay will be present when using a data platform like Snowflake or Databricks, as described below.

Original: 10/19/2023

This is the newest term, describing a capability recently released by both Hightouch and Census. Here, “composable” data activation vendors leverage new data platform functionality (like a Dynamic Table in Snowflake, or a Streaming Table in Databricks) to activate data in near real-time. While data platforms can ingest streaming data in true real-time, the transformation and activation process will depend heavily on what each individual data platform can do, and how efficiently it joins data from other tables.

There are pros and cons to this approach.

The best part about this is that creating a data pipeline from a streaming source is very straightforward; as a customer, you don’t need additional infrastructure outside your data platform for transformation and enrichment, as you do today with S2S + Profile API.

It also theoretically scales really well, as you’re only working with incremental data; however, it’s not truly real-time. Realistically, you’re working in a frame of 1-5 minutes, not 1-5 seconds.
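The 1-5 minute figure follows from simple arithmetic. Assume the data platform refreshes the activation query on a fixed interval, and each refresh takes some time to transform and sync. An event then waits for the next refresh and then for processing. The 60-second interval and 30-second processing time below are illustrative assumptions, not measured numbers for any platform.

```python
import math

def activation_latency(event_ts: float, refresh_interval_s: float, processing_s: float) -> float:
    """Seconds from an event's arrival until it reaches the destination, assuming refreshes
    start at multiples of refresh_interval_s and each takes processing_s to finish."""
    next_refresh = math.ceil(event_ts / refresh_interval_s) * refresh_interval_s
    return next_refresh + processing_s - event_ts

for arrival in (1, 30, 59):
    print(arrival, activation_latency(arrival, refresh_interval_s=60, processing_s=30))
# 1 89
# 30 60
# 59 31
```

Under these assumptions, the worst case approaches the refresh interval plus the processing time. Shortening the interval helps, but each refresh still needs compute, which ties into the cost point below.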

I wouldn’t recommend something like this for transactional messaging, such as a forgot-password email or a purchase confirmation.

Lastly, to work in single-digit minutes, you likely need your data platform resources to be “always on” – a significant cost for you, the customer. It’s important to weigh the cost of this emerging pattern against the cost of maintaining an S2S + Profile API solution.

Identity Resolution

As martech and adtech converge, identity has been caught in the center. Identity with first-party data in the martech world has traditionally been different from identity with third-party data on the adtech side. For a great deep-dive on this topic, I really like this explanation by Caleb from Amperity, but know that any time a vendor creates something like this, they have an opinionated view – this also applies to the blog you’re reading right now!

Identity Stitching

When CDPs have claimed identity resolution, here’s what has traditionally been sold:

Taking unknown website visitors and converting them into known users once they create a profile and/or make a purchase. This has been very much tied to behavioral data collection and building a profile in a CDP. It’s worth highlighting that a vendor really can’t provide identity stitching unless they are helping the customer capture events in the first place.
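Identity stitching can be sketched as a mapping from anonymous IDs (for example, a cookie or device ID) to a known user ID. That mapping gets written when the visitor identifies themselves. The hypothetical `IdentityStitcher` below shows why event capture matters. The pre-login history can only be attributed to the user because those events were captured with the anonymous ID in the first place.

```python
from typing import Dict, List

class IdentityStitcher:
    def __init__(self) -> None:
        self.anon_to_user: Dict[str, str] = {}
        self.events: List[dict] = []

    def track(self, anonymous_id: str, event: str) -> None:
        # Behavioral events are captured before we know who the visitor is.
        self.events.append({"anonymous_id": anonymous_id, "event": event})

    def identify(self, anonymous_id: str, user_id: str) -> None:
        # Called when the visitor creates a profile or makes a purchase.
        self.anon_to_user[anonymous_id] = user_id

    def history_for(self, user_id: str) -> List[str]:
        # Every event whose anonymous ID has been stitched to this user.
        return [e["event"] for e in self.events if self.anon_to_user.get(e["anonymous_id"]) == user_id]

stitcher = IdentityStitcher()
stitcher.track("anon-web", "Page Viewed")
stitcher.track("anon-web", "Product Viewed")
stitcher.track("anon-app", "App Opened")
stitcher.track("anon-other", "Page Viewed")
stitcher.identify("anon-web", "luke@example.com")
stitcher.identify("anon-app", "luke@example.com")
print(stitcher.history_for("luke@example.com"))  # ['Page Viewed', 'Product Viewed', 'App Opened']
```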

First-party Identity Resolution

Other CDPs got their start here. This applies when a customer has first-party data in many different source systems, and all of that data has to be brought into a single place. From there, a vendor can tell that Luke living in Georgia in 2019 is the same Luke living in New Jersey in 2023, because Luke has the same email address tied to both records.

There are two versions of this: deterministic and probabilistic.

Deterministic matching relies on exact matches of known identifiers, such as email, physical address, and so on. Probabilistic matching, on the other hand, can match on various fields, not just PII, and typically provides a confidence score for the likelihood of a match. Some vendors offer just deterministic matching; others offer both.
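The following sketch contrasts the two approaches. Deterministic matching here is an exact comparison of normalized email addresses. The probabilistic side is a deliberately simple weighted string-similarity score using Python’s `difflib.SequenceMatcher`. The fields, weights, and records are hypothetical, and commercial matching engines use far more sophisticated models. Still, the output illustrates the difference: a yes/no answer versus a confidence score.

```python
from difflib import SequenceMatcher
from typing import Dict

def normalize_email(email: str) -> str:
    return email.strip().lower()

def deterministic_match(a: dict, b: dict) -> bool:
    # Same known identifier (after normalization) means same person; anything else is no match.
    return (bool(a.get("email")) and bool(b.get("email"))
            and normalize_email(a["email"]) == normalize_email(b["email"]))

WEIGHTS: Dict[str, float] = {"name": 0.5, "phone": 0.3, "city": 0.2}  # hypothetical weights summing to 1

def probabilistic_score(a: dict, b: dict) -> float:
    """Weighted fuzzy similarity across several fields, returned as a 0-1 confidence score."""
    score = 0.0
    for key, weight in WEIGHTS.items():
        if a.get(key) and b.get(key):
            score += weight * SequenceMatcher(None, a[key].lower(), b[key].lower()).ratio()
    return score

luke_2019 = {"name": "Luke Smith", "email": "Luke@Example.com", "state": "GA"}
luke_2023 = {"name": "Luke Smith", "email": " luke@example.com", "state": "NJ"}
print(deterministic_match(luke_2019, luke_2023))  # True

crm_record = {"name": "Luke Smyth", "phone": "555-0100", "city": "Newark"}
pos_record = {"name": "Luke Smith", "phone": "555-0100", "city": "Newark"}
print(round(probabilistic_score(crm_record, pos_record), 2))  # 0.95
```

The second pair has no shared identifier, so deterministic matching cannot link them. The probabilistic score still flags them as a likely match, leaving the decision threshold to the vendor or customer.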

Third-party Identity Resolution

Third-party identity resolution is when the customer brings in a vendor that has an identity graph built from outside sources and partnerships.

As the customer, you might know a little about who Luke is, but not enough to properly target him with paid ads. With a third-party solution that combines data on individuals with data from their digital devices, you can expand your reach and optimize your paid media spend.

Recently, some of the composable solutions have been packaging this up on behalf of customers as part of their data activation workflows. This largely works by enriching data with hashed email addresses (HEMs).
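A hashed email is commonly produced by normalizing the address and applying SHA-256. Two parties can then match on the same value without exchanging the raw email. The sketch below assumes the common normalization of trimming whitespace and lowercasing. Individual platforms may specify additional rules, so check each destination’s documentation.

```python
import hashlib

def hashed_email(email: str) -> str:
    """Return a hex SHA-256 digest of a trimmed, lowercased email address (assumed normalization)."""
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

a = hashed_email("  Luke@Example.com ")
b = hashed_email("luke@example.com")
print(a == b, len(a))  # True 64
```

Normalizing before hashing matters. Without it, “Luke@Example.com” and “luke@example.com” would produce completely different hashes and never match.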

Conclusion

While things are confusing from a product marketing perspective, the good news is that everyone in this space is adding new and exciting features to make your life easier. When you’re looking at solutions, know what your main challenges and goals are, and ask for a POC or trial period that lets you prove the solution meets your requirements. If you’re looking for where to start, Snowflake (my employer) puts out a great report every year.