This post is brought to you by Census.


Introduction

The quest for a single source of truth has always been the holy grail of marketing.

But what's the point of having data if it’s not useful and usable? The goal of collecting data is to drive business growth. And no matter how sophisticated the infrastructure, a Customer 360 built on top of a cloud data platform doesn’t generate revenue by itself – it has to be used to run better campaigns and experiments.

At Census, every company and brand we talk to is trying to reach a “nirvana” of real-time personalization. Similarly, every marketer has a “dream” campaign they want to run, but they’re blocked by a lack of data access or siloed martech systems that prevent them from getting the information they need. Unlocking these use cases is the true value of a Customer 360.

At the heart of the challenge is efficient customer data collection and management.

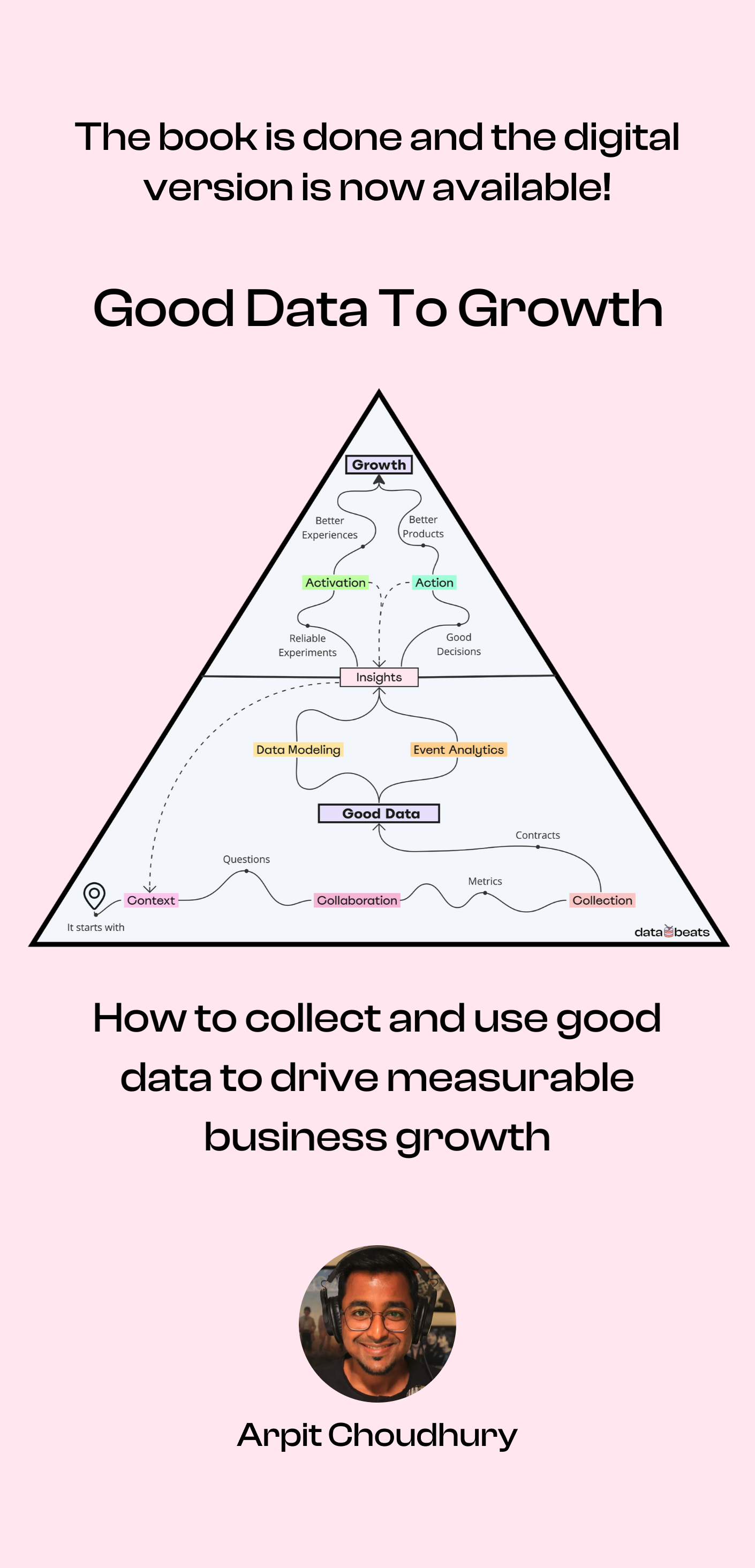

The good news is: this is a challenge that’s shrinking by the day. The speed, scale, and accuracy of data have begun to converge in a way that will genuinely enable marketers to achieve their dreams of real-time personalization.

In this article, I’ll discuss how two decades of advancements in data warehousing and SaaS technology have led us to today, where data can power better customer experiences more quickly and precisely than ever before.

If you enjoy hearing about cutting-edge data tools, you’ll be excited to learn about new real-time capabilities for data warehouses that have emerged within the past 6 months.

Practitioners who prefer seeing action over theory might appreciate real-world, real-time use cases, which I’ll discuss in the second half of the article.

Let’s dive in!

The evolution of customer data technology from 1999-2024

As I mentioned above, the convergence of data speed, scale, and accuracy only became possible within the past 6 months. Data technology has matured just in time for the proliferation of AI, which requires large volumes of fresh, high-quality data.

Another theme that’s driven innovation over the past two decades is the usability of data. To generalize, data people curate good data for the sake of having it, and business people use data without worrying about where it comes from. Both types of people are essential for continuous improvement. Today’s generation of customer data technology enables more collaboration between data and business people than I’ve ever seen before.

1999-2012: Building an accurate source of truth

Since the dawn of time, whether customer transactions were etched in stone or tracked on papyrus scrolls, businesses have struggled with the complexities of collecting, storing, and accessing a golden record of their customers.

In the era of Software as a Service (SaaS), companies centralized their customer data using CRM platforms (like Salesforce) and Customer Data Platforms (CDPs). These tools were great for reacting quickly, driving transactions, and giving business users an interactive experience. However, they couldn’t manage the explosion of big data because their underlying databases weren’t built for scale.

On the other hand, data and Business Intelligence (BI) teams emerged, optimizing for the other end of the spectrum: completeness over speed. This resulted in slow but thorough systems with an arsenal of tools for correctness, data management, and governance.

However, integrating CRMs and CDPs with these new data systems was like asking a dragon to share its gold. It was difficult for business teams and their tools to work with unwieldy data technology.

This divide highlighted the need for a solution that could blend the agility of CRMs/CDPs with the robustness of big data platforms.

2012-2020: Scaling data storage and processing power

Innovations in cloud computing revolutionized data storage and processing at scale. Amazon Redshift became one of the first widely adopted cloud data warehouses, but its original architecture tied storage and compute together on the same nodes, so scaling one meant scaling (and paying for) the other.

Gamechangers like Snowflake and Google BigQuery went further, decoupling compute and storage to unlock a whole new slew of use cases. They provide virtually unlimited, cost-effective storage, ideal for handling vast amounts of data with great accuracy. They also pioneered the ability to tailor computing resources to specific tasks, so workflows with different needs can run independently, each scaling its capacity up or down as required. For example, ML processing might need a high-capacity machine for 20 minutes, whereas BI and analytics need medium capacity for 24 hours.

In the past 5 to 7 years, Snowflake, BigQuery, and Databricks have seen explosive growth (Snowflake’s $3.4B IPO in 2020 was one of the largest software IPOs in history) because they let applications scale compute and storage resources almost without limit, so a single platform can handle very different workloads. To use a radio analogy, they invented a “knob” for computing power that can be turned up or down at any time. We’ll come back to this analogy soon.

2020-2024: Collaborating between data and business

This new infrastructure for data storage and processing sparked a movement to bridge the gap between business operations and customer data. We spearheaded the movement when we started Census in 2018, but it would have happened anyway.

Data Activation and Reverse ETL tools enable business teams to leverage data directly from the cloud data warehouse for personalization, growth, and sales, without needing to duplicate data in a CRM or marketing automation platform (MAP). For marketers, building a Composable CDP on the warehouse transforms data infrastructure into a growth engine with much greater cost savings and flexibility than conventional CDPs.
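To make the Reverse ETL idea more concrete, here is a minimal Python sketch of a single batch sync step. It assumes the warehouse query result arrives as a list of dictionaries and that the sync keeps a snapshot of what it sent on the previous run. The function name `diff_for_sync`, the `email` key, and the `ltv` column are hypothetical illustrations, not Census’s implementation or API; real tools also handle deletes, retries, and destination rate limits.

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]


def diff_for_sync(
    current: List[Row], previous: Dict[str, Row], key: str
) -> Tuple[List[Row], Dict[str, Row]]:
    """Compare the latest warehouse rows with the last synced snapshot.

    Returns the rows that are new or changed (these would be upserted into the
    CRM or MAP) and the snapshot to store for the next run. Deleted rows are
    ignored in this sketch.
    """
    snapshot: Dict[str, Row] = {}
    to_upsert: List[Row] = []
    for row in current:
        row_key = str(row[key])
        snapshot[row_key] = row
        # Only send rows the destination has not already received in this exact form.
        if previous.get(row_key) != row:
            to_upsert.append(row)
    return to_upsert, snapshot


if __name__ == "__main__":
    last_snapshot = {"a@example.com": {"email": "a@example.com", "ltv": 120}}
    warehouse_rows = [
        {"email": "a@example.com", "ltv": 120},  # unchanged, skipped
        {"email": "b@example.com", "ltv": 40},   # new, upserted
    ]
    changes, last_snapshot = diff_for_sync(warehouse_rows, last_snapshot, key="email")
    print(changes)  # [{'email': 'b@example.com', 'ltv': 40}]
```

Sending only the difference keeps the number of API calls to the destination proportional to what actually changed, rather than to the size of the table.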

Despite these advancements, a critical challenge remained – real-time reactivity and low latency.

Traditionally, data warehouses update on a schedule, such as every 24 hours. Their batch-based architecture is designed for joining and aggregating large batches of data, not for real-time processing. Marketers who needed fast-reacting workflows, like abandoned-cart reminders, inventory management, and lead routing, couldn’t adopt the Composable CDP for all their use cases.
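The cost of a schedule is easy to estimate. As a minimal sketch, assume each run snapshots the data when it starts, takes a fixed time to finish, and that events arrive uniformly over the day. An event that lands just after a run starts waits a full interval plus the run time, and on average an event waits half an interval plus the run time. The 30-minute run time below is a hypothetical figure, not a measurement.

```python
from typing import Tuple


def batch_staleness_minutes(interval_min: float, run_min: float) -> Tuple[float, float]:
    """Return (worst-case, average) minutes between an event and its delivery
    downstream for a batch job that starts every `interval_min` minutes and
    takes `run_min` minutes to finish. Assumes uniformly distributed events."""
    worst = interval_min + run_min
    average = interval_min / 2 + run_min
    return worst, average


if __name__ == "__main__":
    # Daily schedule with a hypothetical 30-minute run.
    print(batch_staleness_minutes(24 * 60, 30))  # (1470, 750.0)
```

Under these assumptions, a cart abandoned in the morning would reach the email tool about 12.5 hours later on average, long after the moment to nudge the shopper has passed.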

This latency was one of the last barriers preventing some marketing teams from unleashing the full power of the data warehouse for their operations.

2024 and beyond: Speed is the last frontier

The journey towards real-time data has just begun.

This year, Census became the first to enable real-time Reverse ETL on top of the warehouse. Using a real-time data source like Confluent, Kafka, Materialize, Snowflake Streams, or even HTTP, our Live Syncs can trigger actions in downstream tools in milliseconds.

Up until now, you couldn’t use the data warehouse as the source for event-based workflows where there’s a trigger followed by one or more actions (similar to Zapier Zaps or Make Scenarios). This speed breakthrough means that every use case is now possible with the data warehouse. Latency was the last barrier that blocked a singular data stack from covering all the workloads of your business.
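The trigger-and-actions pattern itself is simple. Here is a minimal in-process Python sketch, assuming events arrive one at a time from some stream. The `Workflow` class, the `dispatch` function, and the event fields are hypothetical and say nothing about how Census Live Syncs are built internally.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


@dataclass
class Workflow:
    name: str
    # Decides whether an incoming event starts this workflow.
    trigger: Callable[[Event], bool]
    # Side effects to run, in order, when the trigger matches.
    actions: List[Callable[[Event], Any]] = field(default_factory=list)


def dispatch(event: Event, workflows: List[Workflow]) -> List[str]:
    """Run the actions of every workflow whose trigger matches the event.

    Returns the names of the workflows that fired.
    """
    fired: List[str] = []
    for workflow in workflows:
        if workflow.trigger(event):
            for action in workflow.actions:
                action(event)
            fired.append(workflow.name)
    return fired


if __name__ == "__main__":
    outbox: List[tuple] = []  # stands in for hypothetical downstream tools
    cart_abandoned = Workflow(
        name="cart_abandoned",
        trigger=lambda e: e.get("type") == "cart_abandoned",
        actions=[
            lambda e: outbox.append(("email", e["user_id"])),
            lambda e: outbox.append(("ad_audience", e["user_id"])),
        ],
    )
    print(dispatch({"type": "cart_abandoned", "user_id": 7}, [cart_abandoned]))  # ['cart_abandoned']
    print(outbox)  # [('email', 7), ('ad_audience', 7)]
```

Keeping triggers as plain predicates means one event can start several workflows, and each workflow can fan out to several tools.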

To return to our radio analogy, you now have a “latency knob” – like you had a “power knob” before – to control how fast you want your data.


Sure, not every workflow needs to be real-time, but now everyone has infinite possibilities to explore in the realm of real-time data activation. In the past, specialized industries like mobile gaming or travel valued speed so highly that they went out of their way to build custom infrastructure. Now, it’s possible for any marketing team to implement high-speed use cases like geotargeting, cart abandonment, or lead routing — the only limit is your imagination. 🌈

Real-world, real-time use cases

Real-time technology is so new that business use cases haven’t quite caught up to the possibilities yet. I predict that over the next 2-3 years, companies will use real-time data to make their critical customer interactions faster and fresher.

Here are a few use cases in different industries we’ve helped our customers with, to inspire you with the art of the possible.

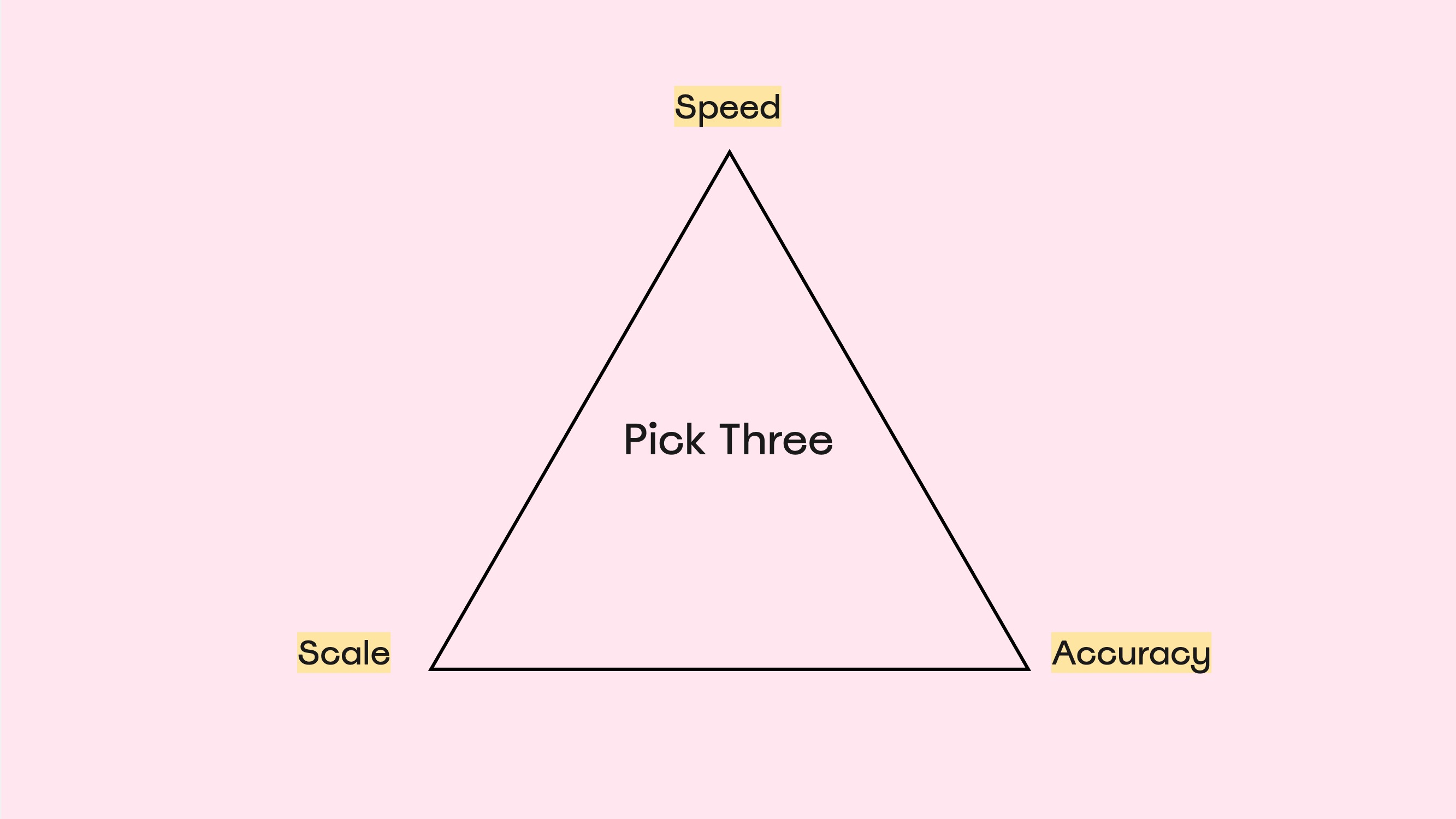

Retail: Real-time inventory management

Retailers don't want customers to be frustrated by "out-of-stock" listings and they don't want to overstock items that aren't popular. During a webinar with Confluent and Materialize, we demoed a real-time use case for eCommerce stores.

This is an example of how real-time streaming helps stores manage inventory more efficiently:

- First, purchases in Shopify are sent via webhooks to Materialize, an operational data warehouse that processes data in real time.

- Materialize joins inventory and order data, immediately identifying orders with out-of-stock items.

- When an out-of-stock order happens, Census automatically triggers personalized emails via Braze to give affected customers accurate shipping estimates. Census also sends a fulfillment order to the item supplier, all in real time.
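In the demo, the join runs continuously inside Materialize. The Python sketch below only mimics its logic so the steps are easy to follow. It assumes orders arrive in sequence with a per-SKU quantity map, and that a line item exceeding current stock is backordered rather than partially filled. The field names and that backorder rule are hypothetical; the flagged pairs are what would be handed to Census to trigger the Braze email and the supplier order.

```python
from typing import Dict, List, Tuple


def find_out_of_stock(
    inventory: Dict[str, int], orders: List[dict]
) -> Tuple[List[Tuple[str, str]], Dict[str, int]]:
    """Apply orders to stock levels in arrival order.

    Returns (order_id, sku) pairs for line items that cannot be filled from
    current stock, plus the remaining stock. Short items are treated as
    backorders: they are flagged and do not reduce stock.
    """
    stock = dict(inventory)  # copy so the caller's snapshot is untouched
    short: List[Tuple[str, str]] = []
    for order in orders:
        for sku, qty in order["items"].items():
            if qty > stock.get(sku, 0):
                short.append((order["id"], sku))
            else:
                stock[sku] = stock.get(sku, 0) - qty
    return short, stock


if __name__ == "__main__":
    inventory = {"mug": 2, "tee": 0}
    orders = [
        {"id": "o1", "items": {"mug": 1}},
        {"id": "o2", "items": {"mug": 2, "tee": 1}},
    ]
    short, remaining = find_out_of_stock(inventory, orders)
    print(short)      # [('o2', 'mug'), ('o2', 'tee')]
    print(remaining)  # {'mug': 1, 'tee': 0}
```

Processing orders in arrival order matters: the first order for the last unit in stock is filled, and later orders for that item are the ones flagged.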

This entire flow takes just a few seconds. It becomes even more valuable when a retailer needs to unify purchases from multiple channels, like Amazon, Instagram, and Walmart. If this has piqued your interest, I recommend watching the full session here.

SaaS: Real-time app personalization

Mezmo, an observability platform, wanted to trigger dynamic onboarding in Intercom, such as showing tailored guides or highlighting specific features based on user persona data and real-time user behavior. They needed to engage new users within their first 3 minutes in the Mezmo product; otherwise, users were likely to abandon the onboarding flow.

Mezmo had crucial customer information in Snowflake, including user persona and company size, but didn’t have a way to combine those insights with user behavior in real time – their data latency was 15+ minutes, preventing them from proactively engaging users.

They had no real-time infrastructure available and, like most growing startups, couldn’t afford to divert software engineers to data workflows. The solution was real-time Reverse ETL with Census Live Syncs, which enabled Mezmo to activate customer data from Snowflake into Intercom with sub-second latency. Now, Mezmo’s growth team can engage users in real time inside the app, at the moment of intent, resulting in a better onboarding experience and a higher activation rate.
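The decision Mezmo needed to make at the moment of intent can be sketched in a few lines of Python. This assumes persona data synced from Snowflake is available as a lookup keyed by user ID and that each behavioral event carries a timestamp. The persona names, guide IDs, and field names are hypothetical; only the 3-minute window comes from the description above.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional

ONBOARDING_WINDOW = timedelta(minutes=3)

# Hypothetical mapping from persona to an in-app guide.
GUIDES = {"developer": "logs-quickstart", "sre": "alerting-tour"}


def pick_onboarding_guide(event: dict, profiles: Dict[str, dict]) -> Optional[str]:
    """Return the guide to show for this event, or None if no guide applies."""
    profile = profiles.get(event["user_id"])
    if profile is None:
        return None  # no warehouse data for this user has arrived yet
    if event["ts"] - profile["signed_up_at"] > ONBOARDING_WINDOW:
        return None  # past the 3-minute onboarding window
    return GUIDES.get(profile["persona"], "general-tour")


if __name__ == "__main__":
    profiles = {"u1": {"persona": "sre", "signed_up_at": datetime(2024, 5, 1, 12, 0)}}
    print(pick_onboarding_guide({"user_id": "u1", "ts": datetime(2024, 5, 1, 12, 2)}, profiles))  # alerting-tour
    print(pick_onboarding_guide({"user_id": "u1", "ts": datetime(2024, 5, 1, 12, 5)}, profiles))  # None
```

The logic is trivial; the latency is not. If the profile lookup lags by 15+ minutes, a brand-new user has no profile during the window, so the function returns None for exactly the users it is meant to help.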

Travel: Real-time schedule updates

Trainline is Europe's leading train and bus app that helps travelers search, book, and manage their journeys across millions of routes, 270 carriers, and 45 countries.

They need to notify passengers in real time as soon as key information changes – for example, if a train has changed platforms, a bus is delayed, or if there’s some other issue that would affect a traveler’s itinerary.

Until now, the only way to build real-time workflows was for the software engineering team to implement complex infrastructure with Kafka or Confluent, which can take several months or, in certain cases, even years. However, using the real-time activation capabilities offered by Census, it’s now possible for an analytics engineer or product manager to set up real-time use cases with little or no help from their engineering counterparts.
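At its core, a schedule-change workflow compares the previous and current state of a departure and fans any change out to booked passengers. A minimal Python sketch follows. The `Departure` fields, message wording, and booking lookup are hypothetical, and a production system would also deduplicate messages and respect each passenger’s notification preferences.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Departure:
    service_id: str
    platform: str
    delay_min: int


def describe_changes(old: Departure, new: Departure) -> List[str]:
    """Human-readable messages for each field that changed."""
    messages: List[str] = []
    if new.platform != old.platform:
        messages.append(f"Platform changed from {old.platform} to {new.platform}")
    if new.delay_min != old.delay_min:
        if new.delay_min > 0:
            messages.append(f"Now delayed by {new.delay_min} min")
        else:
            messages.append("Now running on time")
    return messages


def notifications(
    old: Departure, new: Departure, bookings: Dict[str, List[str]]
) -> List[Tuple[str, str]]:
    """Pair every passenger booked on the service with every change message."""
    messages = describe_changes(old, new)
    return [
        (passenger, message)
        for passenger in bookings.get(new.service_id, [])
        for message in messages
    ]


if __name__ == "__main__":
    before = Departure("S1", platform="4", delay_min=0)
    after = Departure("S1", platform="7", delay_min=10)
    for passenger, message in notifications(before, after, {"S1": ["p1", "p2"]}):
        print(passenger, message)
```

Comparing old and new state, rather than reacting to every raw update, means an unchanged departure produces no messages at all.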

Conclusion

The convergence of speed, scale, and accuracy in data is finally here. It’s been a decades-long journey, from early data platforms only serving technical users to today’s singular customer data stack that also caters to the needs of data-adjacent teams like Product, Marketing, and Growth.

We’re embarking on a whole new world of possibilities where the nature of an organization’s data infrastructure no longer prevents anyone from building real-time personalized experiences and running real-time growth experiments.

If you have thoughts, I’d love to chat! You can reach me on LinkedIn or email me at boris@getcensus.com.